Big Data

A Comprehensive Guide to Implementing an eCommerce Data Pipeline

Running an eCommerce business means generating a lot of data along the way. This data helps you stay competitive and make decisions based on facts instead of guesswork, and guessing is rarely a good strategy in business.

If you want to start an eCommerce business, you need to think about various aspects such as your products, marketing, and branding. But for all of that, you need to rely on data that you will collect as a vendor every day.

This data deals with website traffic, sales records, product details, inventory details, marketing efforts, advertising numbers, customer insights, and so on. Almost all operations related to your business generate various amounts of data.

But what should you do when you get overwhelmed with the amount of data that is being generated?

Well, you need to transform all data coming from your data sources into actionable insights that could mean a lot to your business. And you can do that with a data pipeline. Take a look below and learn more about eCommerce data pipelines and how they can benefit your business.

1. What is a data pipeline?

A data pipeline is essentially a set of tools and activities used to move data from one system, with its own method of storage and processing, to another system where it can be stored and handled differently.

In the eCommerce realm, a data pipeline should be seen as an automated sequence of actions that extracts data from different sources and converts it into a format suitable for further analysis.

Take a look at various places where your business data can be gathered:

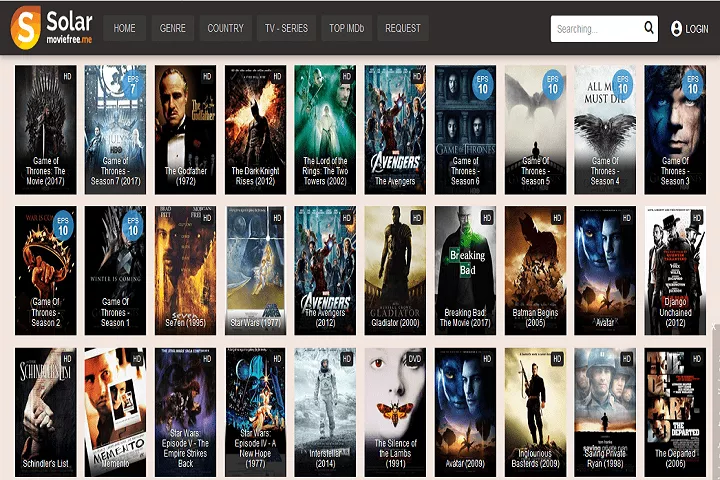

- Email marketing

- CRM

- Customer support apps

- Loyalty apps

- Operations and financial management platforms

- Your eCommerce platform

- POS system(s)

- Advertising platforms

- Other marketplaces or sales channels

A data pipeline allows a user to extract and move data from all of these disparate apps and platforms into one central place. Along the way, the pipeline transforms the data into a usable format for reporting across sources.
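As a rough illustration of "one central place, one usable format", the sketch below maps records from two sources onto a shared schema. The source names, field names, and sample values are all hypothetical; real exports from an eCommerce platform or POS system will look different.

```python
from datetime import date

# Hypothetical raw exports; the field names are assumptions for illustration.
shop_orders = [
    {"order_id": "S-1", "total": "19.99", "placed": "2024-01-05"},
    {"order_id": "S-2", "total": "5.00", "placed": "2024-01-06"},
]
pos_sales = [
    {"receipt": 1001, "amount_cents": 1250, "day": "2024-01-05"},
]

def from_shop(row):
    """Map an online-store order onto the shared schema."""
    return {
        "source": "shop",
        "id": row["order_id"],
        "amount": float(row["total"]),
        "date": date.fromisoformat(row["placed"]),
    }

def from_pos(row):
    """Map a point-of-sale receipt onto the shared schema."""
    return {
        "source": "pos",
        "id": str(row["receipt"]),
        "amount": row["amount_cents"] / 100,
        "date": date.fromisoformat(row["day"]),
    }

def consolidate(shop_rows, pos_rows):
    """Combine both sources into one list, ordered by date and then source."""
    unified = [from_shop(r) for r in shop_rows] + [from_pos(r) for r in pos_rows]
    return sorted(unified, key=lambda r: (r["date"], r["source"]))

central_store = consolidate(shop_orders, pos_sales)
for record in central_store:
    print(record)
```

Once every record shares the same fields, a single report can cover all channels without caring where each sale originated.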

Therefore, all businesses that rely on multichannel insights need to recognize how a data pipeline can help them improve their processes. Remember, before one can extract valuable insights from the gathered data, they first need to have a way to collect and organize it.

2. ETL pipeline vs data pipeline

A lot of businesses that rely on this kind of technology also talk about ETL pipelines. Many have even replaced their traditional pipelines with ETL ones.

So, what is an ETL pipeline? And how does it differ from a traditional data pipeline?

An ETL data pipeline can be described as a set of processes that extract data from a source, transform it, and then load it into a target database or data warehouse for analysis or any other need.

The target destination can be a data warehouse, data mart, or database. It is essential to note that having a pipeline like this requires users to know how to use ETL software tools. Some benefits of these tools include better performance, operational resilience, and a visual view of the data flow.

ETL stands for extraction, transformation, and loading. You can tell by its name that the ETL process is used in data integration, data warehousing, and data transformation (from disparate sources).

The primary purpose behind an ETL pipeline is to collect the correct data, prepare it for reporting, and save it for quick and easy access and analysis. Along with the right ETL software tools, such a pipeline helps businesses free up their time and focus on more critical business tasks.
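The three ETL stages can be sketched with nothing but the Python standard library. This is a minimal illustration, not a production design: the CSV content, the `orders` table, and the cleaning rules (lowercasing emails, skipping rows with an unparseable total) are assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,customer_email,total
1, Ana@Example.com ,19.99
2,bob@example.com,not_a_number
3,CARL@example.com,5.50
"""

def extract(text):
    """Extract: read the raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize emails and drop rows whose total is not numeric."""
    clean = []
    for row in rows:
        try:
            total = float(row["total"])
        except ValueError:
            continue  # skip rows that cannot be used for reporting
        email = row["customer_email"].strip().lower()
        clean.append((int(row["order_id"]), email, total))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, email TEXT, total REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, email, total FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easy to swap the in-memory SQLite database for an actual data warehouse later.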

On the other hand, a traditional data pipeline refers to the set of steps involved in moving data from the source system to the target system. This kind of technology covers copying data, moving it from an on-site location into the cloud, and ordering it or combining it with various other data sources.

A data pipeline is a broader term that includes the ETL pipeline as a subset and consists of a set of processing tools that transfer data from one system to another. Depending on the tools, the data may or may not be transformed.

3. Are there any other kinds of data pipelines?

Keep in mind that there are quite a few kinds of data pipelines that you could make use of. Let’s go through the most prominent ones that have already worked for many other businesses.

- Open-source vs proprietary. If you want a cheap solution that is already available to the general public, seeking open-source tools is the right way to go. However, you should ensure that you have the right experts at the office and the needed resources to expand and modify the functionalities of these tools according to your business needs.

- On-premise vs cloud-native. Some businesses still use on-premise solutions, which require their own hardware and physical space. By contrast, a cloud-native solution is a pipeline built on cloud-based tools, which are often cheaper and require fewer in-house resources.

- Batch vs real-time. Most companies usually go for a batch processing data pipeline to integrate data at specific time intervals (weekly or daily, for instance).

This is different from a real-time pipeline solution, where data is processed as soon as it arrives. This kind of pipeline is suitable for businesses that need to act quickly on financial or economic data, location data, or communication data.
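The difference between batch and real-time processing can be shown with a toy example. The event list and the `StreamingTotals` class below are hypothetical; the point is only that a batch job works on everything collected since the last run, while a real-time handler updates its state one event at a time.

```python
from collections import defaultdict

# Hypothetical sales events as (product, quantity) pairs.
events = [("mug", 2), ("shirt", 1), ("mug", 1)]

def run_batch(accumulated_events):
    """Batch style: process everything collected since the last run at once."""
    totals = defaultdict(int)
    for product, qty in accumulated_events:
        totals[product] += qty
    return dict(totals)

class StreamingTotals:
    """Real-time style: update the running totals as each event arrives."""

    def __init__(self):
        self.totals = defaultdict(int)

    def on_event(self, product, qty):
        self.totals[product] += qty
        return self.totals[product]  # the up-to-date total for this product

# A scheduler (daily or weekly, for instance) would call run_batch.
batch_result = run_batch(events)

# A message queue or webhook would call on_event for every new sale.
stream = StreamingTotals()
running = [stream.on_event(product, qty) for product, qty in events]

print(batch_result)
print(running)
```

Both approaches reach the same totals in the end; the real-time version simply has an answer available after every event instead of only after the scheduled run.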

4. How to determine what data pipeline solution you need?

You might want to consider a few different factors to determine the exact type of data pipeline your business needs. Think through the whole business intelligence and analytics process at your company and ask yourself these questions:

- How often do we need data to be updated and refreshed?

- What kind of internal resources do we have to maintain a data pipeline?

- What is the end goal for our data?

- What types of data do we have access to?

- How should it be extracted, arranged, and maintained?

Keep in mind that you really can build a data pipeline all on your own. But connecting your various data sources and building a sustainable and scalable workflow from zero can be quite a feat.

If you are considering this option, think about what the process would look like and what it would take. A data pipeline consists of many individual components, so you will have quite a bit of thinking to do:

- What insights are you interested in?

- What data sources do you currently have access to/are using?

- Are you ready to adopt additional tools to help you with data storage and reporting?

If all of this seems overwhelming, do not worry. Most businesses out there don’t have the expertise (or resources) to devise a data pipeline independently. However, if you bring in the right expert to work with your data, you can achieve this goal. It will not happen quickly, but it will be worth it.

5. Final thoughts

Take another look at the most critical parts of this guide and evaluate your business needs. Only then will you be able to come up with the right solution for your business.

Again, if you can’t make up your mind between ETL and traditional data pipelines, remember that ETL pipelines always extract, transform, and load data, while other data pipeline tools may or may not include the transformation step.

Keep this in mind since it can make a difference even though it is just one functionality.

How To Use Histogram Charts for Business Data Visualization

Data visualization is an indispensable tool for businesses operating in the contemporary digital era. It enhances the understanding and interpretation of complex data sets, paving the way for informed decision-making. One such tool for data visualization that stands out for its simplicity and effectiveness is the histogram. Keep reading to learn more about histogram charts.

1. Understanding the Basics of Business Data Visualization

Business data visualization is a multidisciplinary field merging statistical analysis and computer science fundamentals to represent complex data sets visually. It transforms raw data into visual information, making it more understandable, actionable, and useful.

Visualization tools such as histogram charts, pie charts, bar graphs, and scatter plots offer businesses a way to understand data trends, patterns, and outliers—essentially bringing data to life.

Whether you’re analyzing sales performance, forecasting market trends, or tracking key business metrics, data visualization can be a powerful tool for presenting data that might otherwise be overlooked.

2. Deciphering the Role of Histogram Charts in Data Analysis

Histogram charts are a superb tool for understanding the distribution and frequency of data. They are bar graphs where each bar represents a range of data values known as a bin or bucket. The height of each bar illustrates the number of data points that fall within each bucket.

Unlike bar graphs that compare different categories, histogram charts visually represent data distribution over a continuous interval or a particular time frame. This makes them invaluable for many business applications, including market research, financial analysis, and quality control.

By portraying large amounts of data and the frequency of data values, histogram charts provide an overview of data distribution that can aid in predicting future data trends. They help businesses spot patterns and anomalies that might go unnoticed in tabular data.

3. Key Steps To Create Effective Histogram Charts

Creating effective histogram charts involves a few steps, starting with data collection. You need to gather relevant and accurate data for the phenomenon you’re studying.

Next, you determine the bins and their intervals. Bins are data ranges, and their number depends on the level of detail you want from your histogram. In most cases, keep the bins equal in width so that bar heights can be compared directly.

Once you’ve structured your data and decided on the number of bins, the next step is to count how many data points fall into each bin. This is the basis of your histogram.

Finally, you draw the histogram with the bins on the x-axis and the frequency on the y-axis. Each bin is represented by a bar, the height of which represents the number of data points that fall into that bin.
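The steps above can be sketched in plain Python. The order values and the choice of four bins are hypothetical, and the "drawing" here is a simple text rendering (with the bins listed down the page rather than along the x-axis) so that the example needs no plotting library.

```python
def histogram(values, num_bins):
    """Split the value range into equal-width bins and count points per bin."""
    lo, hi = min(values), max(values)
    # Guard against a zero width when every value is identical.
    width = (hi - lo) / num_bins if hi > lo else 1.0
    counts = [0] * num_bins
    for v in values:
        # The maximum value would land one past the last bin, so clamp it.
        idx = min(int((v - lo) / width), num_bins - 1)
        counts[idx] += 1
    edges = [lo + i * width for i in range(num_bins + 1)]
    return edges, counts

def render(edges, counts):
    """Draw one text bar per bin; the bar length is the bin's frequency."""
    lines = []
    for left, right, count in zip(edges, edges[1:], counts):
        lines.append(f"{left:6.1f} to {right:6.1f} | {'#' * count}")
    return lines

# Hypothetical order values collected for the analysis.
order_values = [12, 15, 22, 25, 27, 31, 38, 40, 44, 52]

edges, counts = histogram(order_values, num_bins=4)
for line in render(edges, counts):
    print(line)
```

In practice, a plotting library such as Matplotlib (`matplotlib.pyplot.hist`) handles the binning and the drawing in one call, but the underlying logic is the same as in this sketch.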

4. Advantages of Using Histogram Charts for Business Data Visualization

Histograms offer a host of advantages in the realm of business data visualization. They provide a clear, visual summary of large data sets, making it easier to digest and comprehend the data.

Histograms are also useful in identifying outliers or anomalies in data. This matters in industries such as finance and quality control, where an anomaly could signal a serious issue that needs to be addressed.

5. Real-World Examples of Business Data Visualization Using Histogram Charts

Many businesses use histograms to visualize data. For instance, a retail company may use histograms to analyze customer purchase patterns, enabling them to identify peak shopping times, seasonality, and trends in customer preferences.

A manufacturing company might use histogram charts to monitor product quality. By analyzing the frequency of defects, they can identify the cause of the problem and take corrective actions faster.

Histograms are also widely used in the financial industry. Financial analysts use histogram charts to visualize the distribution of investment returns, helping them to understand the risk associated with an investment.

Histogram charts are crucial in business data visualization, offering clear and concise representations of large data sets.
